A.I. as Artisanal Intelligence

And the opportunity for the digital Atelier.

I have spent most of the last couple of months being a complete beginner in using AI, as opposed to thinking about the idea of it. I have approached the technology the way preschoolers do: not by trying to understand it but by playing with it. I have subscribed to Claude, ChatGPT, Perplexity, and now Gemini (to test its “Deep Research” feature), and am now beginning to understand Apple Intelligence.

Once I got past feeling like a complete numpty who was “doing it wrong”, I started to enjoy myself. I asked questions of one platform, refined the answers in another, and asked a third to review the result. I challenged it on its sources and started to use it as a coach and mentor. Gradually, I have developed a sense of what it’s good at, where it isn’t, and what that might mean for individuals using it. I have also watched and read those who have been working with the technology for a couple of years and who have proven to be great mentors.

I have used it to support my work by creating plans that act as frameworks for client discussions. I have been impressed and humbled by its ability to develop versions for discussion in a fraction of the time it would take me. Where we used to have “best practice”, we can now develop plans tailored to individuals and circumstances, reviewed and updated continuously, with much more life and relevance.

So, what have I learned so far?

Firstly, I am beginning to understand how powerful AI is at doing the first eighty per cent of much of the work we do on a regular, repeated basis. It follows that those who sit in organisations between the producers and the users, who write reports, check quality, conduct HR appraisals, and handle routine accounts, data analysis, sales, and the rest of the operational overhead that every organisation carries as baggage, face an existential threat. AI in its various guises, particularly when configured as an agent, has their number. Unless they can move beyond doing just enough to get by and genuinely add novel, incremental value, they will become superfluous uncomfortably quickly.

Secondly, AI can leverage the talents of the producers by giving them tools and capabilities that previously required an organisation to access. Using the same agentic processes, they can create their own virtual teams. As a writer, I can create researchers, sub-editors, reviewers, and a whole editorial team. Any moment now, Substack will be launching a platform capability that will enable those with the ideas and connections not just to compete with traditional media but to circumvent it.

See this from Ted Gioia.

Thirdly, to use it effectively, I must master the domain in which I use it. As good as it is, it is relatively promiscuous in selecting its sources, which puts quality and originality at risk. Effective use also requires strong prompt discipline, which in turn requires deep knowledge of what we are exploring. Without that knowledge, AI will regress to the mean. The old acronym GIGO (Garbage In, Garbage Out) is alive and well in the age of AI.

Lastly (for now), small groups have exponential potential to leverage technology's abilities. They can be large enough to bring diverse perspectives and small enough to build strong, trusted relationships and hold the sort of dialogues that uncover insight.

By their nature, large groups cohere around systems and processes designed for efficiency and are often reluctant to embrace ideas that disrupt them. As a result, they change more slowly than their environment and can more easily fail to see what is emerging.

I've been using a simple matrix of the different types of uncertainty as a conversation starter with those I work with, and I think “Artisanal Intelligence” has a place in it:

I’m considering Artisanal Intelligence as complementary to Artificial Intelligence. Artificial Intelligence is a powerful tool for accessing and manipulating our known knowns and embracing our known unknowns, whilst Artisanal Intelligence is a great way to explore our unknown knowns and venture into mysteries in search of inspiration.

It’s a challenging space, because I suspect that those who use artificial intelligence as a solution rather than a tool are travelling an easy path towards becoming average. After all, technology has no regard for who it works for and will supply much the same answers to the same questions, whoever asks.

I think the space for the artisan lies in boxes 2 and 3. It is accessed by crafting questions that uncover material to work with rather than easy answers.

This raises another question: where does this happen?

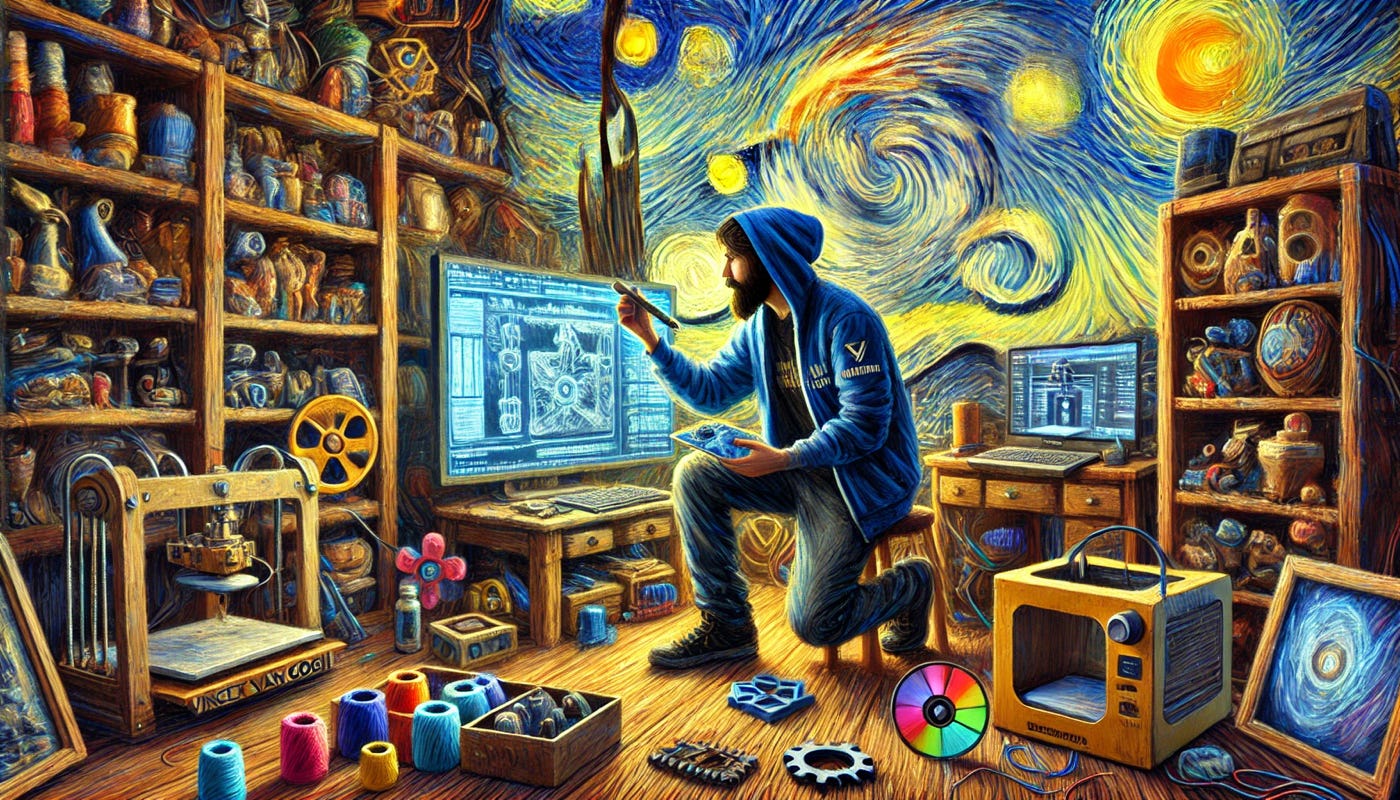

I have in mind a modern-day atelier. Historically, the atelier was the birthplace of Renaissance art; I see its modern equivalent as a workshop for creative digital craft. In this collaborative space, skilled individuals and teams use contemporary digital tools and technologies to design, create, and refine innovative works. It blends the traditional ethos of craftsmanship (attention to detail, artistry, and mastery) with modern digital techniques such as coding, 3D design, animation, and digital fabrication. A modern atelier serves as a hub for experimentation, powered by conversation, insight, interdisciplinary collaboration, and the production of ideas that bridge the gap between artistry and cutting-edge technology.

Few large organisations can cope with this space without suffocating it with process, evaluation, and bureaucracy. So I wonder how we might create the conditions for conversations that venture “outside the walls” to thrive, connect, and multiply without being choked by the weeds of short-term performance. Where can we have conversations that reach beyond efficiency and performance, venture into beauty and purpose, and uncover alternative paths to the conditions necessary for growth?

The current experiment with Mighty Networks is a playground open to ideas and experiments, and a week and a bit in, it is throwing up interesting, part-formed ideas. We’ll see where it takes us.

As we head into 2025, I think these are questions that matter.

Thank you for the time and effort you’ve given to reach this level of understanding.

I have been so thoroughly absorbed in, and affected by, Iain McGilchrist’s perspective on the asymmetrical hemispheres of the brain that I see AI as left-brain thinking on steroids. The temptation is to stop at what it gives us as a settled point of knowledge: in essence, this is what is known. But it isn’t. Human experience teaches us other things. Our awareness sometimes arrives without effort, as flashes of insight. I had one of those on Sunday morning at church, in the midst of singing Mendelssohn’s Vom Himmel Hoch cantata. It was one of the hardest intellectual challenges of awareness and presence that I’ve ever faced. The insight was the culmination of, at one level, a year’s worth of thought and, at another, six months of study.

My question is: how do we test AI knowledge against human experience? If McGilchrist is correct that left-brain thinking is narrow and exclusive (you suggest that it is) and that right-brain thinking is embodied, intuitive, and the greater form of knowledge, how do we proceed? We can’t avoid the question, because all organizations will eventually be trusting (hear me when I say this) their way of using AI. Efficient use will lead to mediocre results. From my perspective, while AI may be a huge technological advance, humans will still be the ones using it. Its value is determined by human performance. So, how does this idea of Artisanal Intelligence really address the dimension of human development in the future?